Election Timeline

- Election announced: April 15, 2025

- Parliament dissolved: April 15, 2025

- Nomination Day: April 23, 2025

- Campaign period: April 23 to May 1, 2025

- Cooling-off Day: May 2, 2025

- Polling Day: May 3, 2025

Key Campaign Guidelines

New Online Content Rules

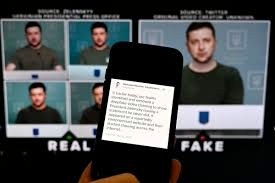

- For the first time, a law bans fake or digitally altered online content that misrepresents candidates.

- This includes AI-generated content, Photoshopped material, manipulated audio/video, or spliced clips that change meaning.

- The Elections Department (ELD) will maintain a daily-updated list of prospective candidates on its website.

Banners, Flags and Posters

- No new election advertising displays until after the nomination proceedings on April 23

- Existing displays can remain if they were legally displayed before April 15, haven’t been modified, and are declared within 12 hours.

- Permanent location markers for party offices are exempt from these rules

Online Campaigning

- Allowed through social media, websites, podcasts, and emails

- Only political parties, candidates, and election agents can publish paid online ads

- Singapore citizens can post unpaid ads individually

- All online ads must display the names of the people involved in publishing them

- No new election advertising may be published on Cooling-off Day and Polling Day (May 2-3)

Films in Campaigning

- Party political films are generally prohibited.

- Exceptions include factual/objective films, live recordings of legal gatherings, and films showing party manifestos without animation/dramatic elements.

Other Restrictions

- Publication of election surveys and exit polls is prohibited from Writ issuance until the close of polling.

- Only Singapore citizens can participate in elections and campaigning

- Negative campaigning based on hate or false statements is discouraged

- Those under 16 years of age and foreigners are prohibited from election activities

The article emphasizes transparency, preventing foreign interference, and maintaining the integrity of Singapore’s electoral process.

Analysis of Processes to Combat Misinformation and Foreign Interference in Singapore’s GE2025

According to the article, Singapore has implemented several processes to address fake news, misleading advertising, and foreign interference during the 2025 General Election:

Combating Fake News and Manipulated Content

- New Digital Content Manipulation Law

  - First-time implementation in GE2025

  - Specifically bans “digitally generated or manipulated content that realistically misrepresents a prospective candidate’s speech or actions”

  - Covers content created using:

    - Generative AI

    - Photo editing techniques

    - Audio manipulation (dubbing)

    - Video manipulation (splicing/cutting to alter meaning)

- Transparency Requirements

  - All online election advertisements must display the names of the people involved in publishing them.

  - Paid advertisements must include a statement indicating they were sponsored/paid for and who funded them.

  - Creates an accountability trail for content

Preventing Misleading Election Information

- Survey and Poll Restrictions

  - Ban on publishing election survey results from Writ issuance until the close of polling

  - Ban on creating social media polls or surveys whose results can be viewed

  - Ban on reposting election survey results

  - Limits potentially misleading or unscientific polling that could influence voters

- Film Content Regulations

  - Prohibition on party political films that could distort facts

  - Exceptions only for “factual and objective” films that “do not present a distorted picture of facts”

  - Creates barriers to emotionally manipulative or misleading video content

Countering Foreign Interference

- Citizenship Requirements

  - Only Singapore citizens can participate in elections and campaigning

  - Explicit prohibition against foreigners and foreign entities participating in election activities

  - Candidates warned against “becoming vectors or victims” of foreign interference

- Authorization Requirements

  - Anyone conducting activities supporting a candidate must be individually authorized in writing.

  - Creates a paper trail of who is legitimately involved in campaigns

  - Makes it more challenging for unauthorized foreign actors to claim association

- Warning Against Foreign Support

  - Candidates are explicitly told not to solicit foreign support for their campaigns.

  - Advisory to be alert to “suspicious behaviours and hidden agendas”

Enforcement Mechanisms

- Designated Enforcement Authority

  - Aetos Security Management is authorized to enforce display rules

  - Political parties are given three hours to remove non-compliant materials when notified

  - Non-compliance results in removal at the candidate’s expense

- Implied Use of Existing Laws

  - Reference to using FICA (Foreign Interference (Countermeasures) Act) and POFMA (Protection from Online Falsehoods and Manipulation Act) against social media manipulation

  - Established legal framework to address more serious violations

Assessment of Singapore’s Approach

The approach appears comprehensive but heavily regulatory, focusing on prohibition rather than media literacy. While it creates multiple barriers to misinformation and foreign influence, the effectiveness will depend on:

- Detection capabilities for digitally manipulated content

- Speed of enforcement during the short campaign period

- Clarity in distinguishing between legitimate campaigning and prohibited content

- Balance between preventing misinformation and allowing robust political discourse

The system emphasizes maintaining the integrity of Singapore’s electoral process by ensuring “the outcome of Singapore’s elections must be for Singaporeans alone to decide.”

Singapore’s Approach to Fake News and Foreign Interference

Singapore’s strict approach to fake news and foreign interference in elections stems from several interconnected concerns:

Concerns About Fake News

- Social Cohesion Preservation

  - Singapore’s multiracial, multireligious society is considered vulnerable to divisions.

  - Misinformation can exacerbate tensions between ethnic and religious communities.

  - The article warns explicitly against statements that “may cause racial or religious tensions or affect social cohesion”

- Electoral Integrity Protection

  - Fake news can undermine fair democratic processes by misleading voters

  - The emphasis on “upholding the truthfulness of representation during an election” suggests a concern that voter decisions should be based on accurate information

  - Rules against manipulated content protect candidates from being misrepresented

- National Security Framework

  - Singapore views information integrity as part of national security

  - The comprehensive regulatory approach aligns with Singapore’s general governance philosophy of preemptive control of potential threats

Concerns About Foreign Interference

- Sovereignty Protection

  - The article explicitly states “the outcome of Singapore’s elections must be for Singaporeans alone to decide”

  - Foreign influence is seen as fundamentally undermining electoral sovereignty.

  - The emphasis on citizenship requirements for all election activities reinforces this boundary.

- Regional Geopolitical Context

  - Singapore’s position in Southeast Asia, with larger neighbors and significant power competition in the region, makes it sensitive to external influence.

  - The comprehensive measures reflect awareness of how foreign actors have attempted to influence elections in other countries.

- Digital Vulnerability

  - The mention of cybersecurity threats during the election season indicates awareness of technical vulnerabilities.

  - Reference to coordination with tech platforms suggests recognition that foreign interference often operates through social media and digital channels.

Historical Context

While not explicit in the article, Singapore’s approach should be understood in light of its history:

- The experience of communal tensions in its early history influences the government’s caution about divisive content

- Singapore’s governance model has consistently prioritized stability and control over unrestricted expression

- Previous elections globally have demonstrated how misinformation and foreign interference can disrupt democratic processes.

The comprehensive regulatory framework in Singapore’s electoral guidelines reflects these concerns, with multiple overlapping systems designed to maintain information integrity and prevent external influence in what the government considers a purely domestic democratic process.

Foreign Interference Cases Affecting Singapore’s National Security

While the article doesn’t detail specific cases of foreign interference in Singapore, the following historical and contemporary cases provide context for Singapore’s vigilant stance toward foreign interference in elections and national security matters.

Historical Cases

Operation Coldstore (1963)

- While primarily a domestic security operation, it was conducted partly due to concerns about foreign Communist influence

- The Singapore government viewed some political opposition as potentially influenced by foreign Communist elements.

- This period shaped Singapore’s early stance on foreign political interference

Hendrickson Affair (1988)

- An American diplomat was expelled from Singapore for allegedly interfering in domestic politics.

- Accused of encouraging opposition politicians and involvement with civil society groups

- Heightened Singapore’s sensitivity to Western diplomatic engagement with opposition

Information Operations During Malaysia-Singapore Tensions

- During periods of bilateral tension, information campaigns allegedly originating from across borders have attempted to inflame disagreements

- These campaigns have centered on issues such as water rights disputes and territorial claims

- Reinforced Singapore’s view that information warfare is a genuine national security concern

Contemporary Cases

Chinese Influence Operations

- In 2017, Singapore expelled a Chinese-born professor for allegedly being an “agent of influence”

- Singapore has been cautious about Chinese influence through cultural, business, and academic channels.

- Officials have warned about potential United Front Work Department activities.

Online Influence Operations

- Singapore security agencies have reported detecting foreign-originated social media campaigns targeting domestic issues.

- These campaigns allegedly attempted to exploit social divides on topics like immigration and race relations.

- Led to the development of POFMA (Protection from Online Falsehoods and Manipulation Act)

FICA Development Context (2021)

- The Foreign Interference (Countermeasures) Act was introduced after officials cited “hostile information campaigns” by unnamed foreign actors.

- The law was justified by citing interference examples from other countries, including electoral interference in the US and Europe.

Global Context Influencing Singapore’s Approach

Russian Interference in US and European Elections

- Well-documented Russian campaigns targeting electoral processes

- Use of social media, hack-and-leak operations, and amplification of divisive content

- Demonstrates how foreign actors can destabilize even established democracies

Chinese Influence in Regional Politics

- Reports of Chinese influence operations throughout Southeast Asia

- Economic leverage used for political influence

- Creates heightened regional awareness of interference tactics

Information Operations During COVID-19

- Singapore officials have cited foreign-originated misinformation during the pandemic.

- Created additional justification for strong controls on information flows

- Reinforced the link between information security and national security

Singapore’s comprehensive approach to preventing foreign interference in elections reflects these experiences and its assessment that small, multiracial states with open economies are particularly vulnerable to such interference. The regulatory framework aims to preserve what officials describe as “cognitive sovereignty” – ensuring Singaporeans make electoral decisions free from external manipulation.

Analysis of the Deepfake Scam in Singapore

The Scam Anatomy

This case shows a sophisticated executive-impersonation attack, similar to business email compromise (BEC) but carried out over WhatsApp and video conferencing, and enhanced with deepfake technology:

- Initial Contact: Scammers first impersonated the company’s CFO via WhatsApp

- Trust Building: They scheduled a seemingly legitimate video conference about “business restructuring”

- Technology Exploitation: Used deepfake technology to impersonate multiple company executives, including the CEO

- Pressure Tactics: Added legitimacy with a fake lawyer requesting NDA signatures

- Financial Extraction: Convinced the finance director to transfer US$499,000

- Escalation Attempt: Requested an additional US$1.4 million, which triggered suspicion

What makes this attack particularly concerning is the multi-layered approach, which combines social engineering, technological deception, and psychological manipulation.

Anti-Scam Support in Singapore

Singapore has established a robust anti-scam infrastructure:

- Anti-Scam Centre (ASC): The primary agency that coordinated the response in this case

- Cross-Border Collaboration: Successfully worked with Hong Kong’s Anti-Deception Coordination Centre (ADCC)

- Financial Institution Partnership: HSBC’s prompt cooperation with authorities was crucial

- Rapid Response: The ASC successfully recovered the full amount within 3 days of the fraud

Singapore’s approach demonstrates the value of having specialized anti-scam units with established international partnerships and banking sector integration.

Deepfake Scam Challenges in Singapore

Singapore faces specific challenges with deepfake scams:

- Financial Hub Vulnerability: As a global financial center, Singapore businesses are high-value targets

- Technological Sophistication: Singapore’s tech-savvy business environment may paradoxically create overconfidence

- Multinational Environment: Companies with international operations face complexity in verifying communications

- Cultural Factors: Hierarchical business structures may make employees hesitant to question apparent leadership directives

Preventative Measures and Future Concerns

To address these challenges, Singapore authorities recommend:

- Establishing formal verification protocols for executive communications

- Training employees specifically on deepfake awareness

- Implementing multi-factor authentication for financial transfers

- Creating organizational cultures where questioning unusual requests is encouraged

The incident highlights the need for both technological and human-centered safeguards in an environment where AI technology continues to advance rapidly.

Notable Deepfake Scams Beyond the Singapore Case

Corporate Deepfake Incidents

Hong Kong $25 Million Heist (2024): The article mentions a similar case in Hong Kong, where a multinational corporation lost HK$200 million (approximately US$25 million) after an employee participated in what appeared to be a legitimate video conference. All participants except the victim were AI-generated deepfakes. This is one of the largest deepfake financial frauds reported to date.

UK Energy Company Scam (2019): In one of the first major reported cases, criminals used AI voice technology to impersonate the chief executive of a UK-based energy company’s German parent company. They convinced the UK firm’s CEO to transfer €220,000 (approximately US$243,000) to a Hungarian supplier. The voice deepfake was convincing enough to replicate the executive’s slight German accent and speech patterns.

Consumer-Targeted Deepfake Scams

Celebrity Investment Scams: Deepfake videos of celebrities like Elon Musk, Bill Gates, and various financial experts have been used to promote fraudulent cryptocurrency investment schemes. These videos typically show the celebrity “endorsing” a platform that promises unrealistic returns.

Political and Public Trust Manipulation: While not always for direct financial gain, deepfakes of political figures making inflammatory statements have been deployed to manipulate public opinion or disrupt elections. These erode trust in legitimate information channels and can indirectly facilitate other scams.

Dating App Scams: Scammers have used deepfake technology to create synthetic profile videos on dating apps, establishing trust before moving to romance scams. This represents a technological evolution of traditional romance scams.

Emerging Deepfake Threats

Real-time Video Call Impersonation: Advancements now allow for real-time facial replacement during video calls, making verification protocols that rely on video confirmation increasingly vulnerable.

Voice Clone Scams Targeting Families: Cases have emerged where scammers use AI to clone a family member’s voice, then call relatives claiming to be in an emergency situation requiring immediate financial assistance.

Deepfake Identity Theft: Beyond financial scams, deepfakes are increasingly used for identity theft to access secure systems, bypass biometric security, or create fraudulent identification documents.

Global Response Trends

Different jurisdictions are responding to these threats with varying approaches:

- EU: Implementing broad AI regulations that include provisions for deepfake disclosure

- China: Instituting specific regulations against deepfakes requiring clear labeling

- United States: Developing industry standards and focusing on detection technology

- Southeast Asia: Establishing regional cooperation frameworks similar to the Singapore-Hong Kong collaboration mentioned in the article

The proliferation of these scams highlights the need for continued technological countermeasures in addition to traditional fraud awareness training and verification procedures.

A finance worker at a multinational company was tricked into transferring $25 million (about 200 million Hong Kong dollars) to fraudsters after participating in what they thought was a legitimate video conference call.

The scam involved:

- The worker initially received a suspicious message supposedly from the company’s UK-based CFO about a secret transaction

- The worker was initially skeptical, but those doubts were overcome after joining a video conference call.

- Everyone on the call appeared to be colleagues they recognized, but all participants were actually deepfake recreations.

- The scam was only discovered when the worker later checked with the company’s head office.

Hong Kong police reported making six arrests connected to such scams. They also noted that in other cases, stolen Hong Kong ID cards were used with AI deepfakes to trick facial recognition systems for fraudulent loan applications and bank account registrations.

This case highlights the growing sophistication of deepfake technology and the increasing concerns about its potential for fraud and other harmful uses.

Analyzing Deepfake Scams: The New Frontier of Digital Fraud

The Hong Kong Deepfake CFO Case: A Sophisticated Operation

The $25 million Hong Kong scam represents a significant evolution in financial fraud tactics. What makes this case particularly alarming is:

- Multi-layered deception – The scammers created not just one convincing deepfake but multiple synthetic identities of recognizable colleagues in a conference call setting

- Targeted approach – They specifically chose to impersonate the CFO, a high-authority figure with legitimate reasons to request financial transfers.

- Social engineering – They overcame the victim’s initial skepticism by creating a realistic social context (the conference call) that normalized the unusual request.

The Growing Threat Landscape of Deepfake Scams

Deepfake-enabled fraud is expanding in several concerning directions:

1. Financial Fraud Variations

- Executive impersonation – Like the Hong Kong case, targeting finance departments by mimicking executives

- Investment scams – Creating fake testimonials or celebrity endorsements for fraudulent schemes

- Banking verification – Bypassing facial recognition and voice authentication systems

2. Identity Theft Applications

- As seen in the Hong Kong ID card cases, deepfakes are being combined with stolen identity documents to impersonate real people for:

  - Loan applications

  - Bank account creation

  - Bypassing KYC (Know Your Customer) protocols

3. Technical Evolution

- Reduced technical barriers – Creating convincing deepfakes once required significant technical expertise and computing resources, but user-friendly tools are making this technology accessible.

- Quality improvements – The technology is rapidly advancing in realism, making detection increasingly difficult.

- Real-time capabilities – Live video manipulation is becoming more feasible

Why Deepfake Scams Are Particularly Effective

Deepfake scams exploit fundamental human cognitive and social vulnerabilities:

- Trust in visual/audio evidence – Humans are naturally inclined to trust what they see and hear

- Authority deference – People tend to comply with requests from authority figures

- Social proof – The presence of multiple, seemingly legitimate colleagues creates a sense of normalcy

- Security fatigue – Even security-conscious individuals can become complacent when faced with seemingly strong evidence

Protection Strategies

For Organizations

- Multi-factor verification protocols – Implement out-of-band verification for large transfers (separate communication channels)

- Code word systems – Establish private verification phrases known only to relevant parties

- AI detection tools – Deploy technologies that can flag potential deepfakes

- Training programs – Educate employees about these threats with specific examples
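The out-of-band verification and code-word controls listed above can be expressed as a simple approval rule. The following is a minimal, hypothetical sketch in Python: the `TransferRequest` fields, the `VERIFICATION_THRESHOLD` amount, the placeholder callback numbers, and the `blue-heron` code word are illustrative assumptions, not any bank’s or authority’s actual procedure. It encodes the core idea: an instruction received over one channel (for example, a video call) is never approved on that channel alone, but must be confirmed by calling back a number registered in advance and exchanging a shared code word.

```python
import hmac
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical policy values for illustration only.
VERIFICATION_THRESHOLD = 10_000  # transfers at or above this need out-of-band checks

# Contact numbers registered in advance (placeholders), never taken from the request.
REGISTERED_CALLBACK_NUMBERS = {
    "cfo": "+65-0000-0001",
    "ceo": "+65-0000-0002",
}


@dataclass
class TransferRequest:
    requester_role: str                          # who the request claims to be from
    amount: float
    request_channel: str                         # e.g. "video_call", "whatsapp"
    callback_number_used: Optional[str] = None   # number staff dialled to confirm
    confirmation_channel: Optional[str] = None   # channel used for that confirmation
    code_word_given: Optional[str] = None        # code word supplied on the callback


def approve(request: TransferRequest, expected_code_word: str) -> Tuple[bool, str]:
    """Return (approved, reason) under a simple out-of-band verification rule."""
    if request.amount < VERIFICATION_THRESHOLD:
        return True, "below threshold"

    registered = REGISTERED_CALLBACK_NUMBERS.get(request.requester_role)
    if registered is None:
        return False, "no registered contact for claimed requester"

    # Confirmation must come over a different channel than the request itself,
    # so a deepfaked video call cannot "confirm" its own instruction.
    if request.confirmation_channel in (None, request.request_channel):
        return False, "not confirmed out of band"

    # Staff must dial the pre-registered number, not one the caller supplied.
    if request.callback_number_used != registered:
        return False, "callback did not use registered number"

    # Compare the shared code word in constant time.
    given = (request.code_word_given or "").encode()
    if not hmac.compare_digest(given, expected_code_word.encode()):
        return False, "code word mismatch"

    return True, "verified out of band"


# A request made only on a video call is held, however convincing the call looked.
print(approve(TransferRequest("cfo", 499_000, "video_call"), "blue-heron"))
# prints (False, 'not confirmed out of band')
```

The design choice worth noting is that the callback number comes from the organization’s own records rather than from the request, since a scammer controlling the video call could otherwise supply a number they also control.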

For Individuals

- Healthy skepticism – Question unexpected requests, especially those involving finances or sensitive information

- Verification habits – Use different communication channels to confirm unusual requests.

- Context awareness – Be especially vigilant when urgency or secrecy is emphasized.

- Technical indicators – Look for inconsistencies in deepfakes (unnatural eye movements, lighting inconsistencies, audio-visual misalignment)

The Future of Deepfake Fraud

The deepfake threat is likely to evolve in concerning ways:

- Targeted personalization – Using information gleaned from social media to create more convincing personalized scams

- Hybrid attacks – Combining deepfakes with other attack vectors like compromised email accounts

- Scalability – Automating parts of the scam process to target more victims simultaneously

As this technology continues to advance, the line between authentic and synthetic media will become increasingly blurred, requiring both technological countermeasures and a fundamental shift in how we verify identity and truth in digital communications.

What Are Deepfake Scams?

Deepfake scams involve using artificial intelligence (AI) technology to create convincing fake voice recordings or videos that impersonate real people. The goal is typically to trick victims into transferring money or taking urgent action.

Key Technologies Used

- Voice cloning: Requires just 10-15 seconds of original audio

- Face-swapping: Uses photos from social media to create fake video identities

- AI-powered audio and video manipulation

How Scammers Operate

- Emotional Manipulation: Scammers exploit human emotions like:

  - Fear

  - Excitement

  - Curiosity

  - Guilt

  - Sadness

- Creating Urgency: The primary goal is to make victims act quickly without rational thought.

Real-World Examples

- In Inner Mongolia, a victim transferred 4.3 million yuan after a scammer used face-swapping technology to impersonate a friend during a video call.

- Growing concerns in Europe about audio deepfakes mimicking family members’ voices

How to Protect Yourself

Identifying Fake Content

- Watch for unnatural lighting changes

- Look for strange blinking patterns

- Check lip synchronization

- Be suspicious of unusual speech patterns
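The “strange blinking patterns” cue above is sometimes made measurable with the eye aspect ratio (EAR), a ratio of distances between six eye landmarks that drops sharply when the eye closes. The Python sketch below is illustrative only: it assumes landmark coordinates come from a separate face-landmark detector (not shown), and the closed-eye threshold, minimum blink length, and “normal” blinks-per-minute range are hypothetical defaults, not validated values. Modern deepfakes often blink convincingly, so this is at best one weak signal among many, never proof that a video is real or fake.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio from six landmarks p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid directly below them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame: Sequence[float],
                 threshold: float = 0.2,       # assumed "eye closed" cut-off
                 min_frames: int = 2) -> int:  # assumed minimum blink length
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended while the eye was still closed
        blinks += 1
    return blinks


def blink_rate_is_unusual(ear_per_frame: Sequence[float], fps: float,
                          low: float = 8.0, high: float = 30.0) -> bool:
    """Flag clips whose blinks per minute fall outside an assumed normal range [low, high]."""
    minutes = len(ear_per_frame) / fps / 60.0
    if minutes == 0:
        return False  # nothing to judge
    rate = count_blinks(ear_per_frame) / minutes
    return not (low <= rate <= high)
```

In use, one would compute `eye_aspect_ratio` for each video frame, pass the sequence to `blink_rate_is_unusual` with the clip’s frame rate, and treat a `True` result only as a prompt to verify the caller through another channel.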

Safety Practices

- Never act immediately on urgent requests

- Verify through alternative communication channels

- Contact the supposed sender through known, trusted methods

- Remember: “Seeing is not believing” in the age of AI

Expert Insights

“When a victim sees a video of a friend or loved one, they tend to believe it is real and that they are in need of help.” – Associate Professor Terence Sim, National University of Singapore

Governmental Response

Authorities like Singapore’s Ministry of Home Affairs are:

- Monitoring the technological threat

- Collaborating with research institutes

- Working with technology companies to develop countermeasures

Conclusion

Deepfake technology represents a sophisticated and evolving threat to personal and financial security. Awareness, skepticism, and verification are key to protecting oneself.

Maxthon

Maxthon has set out on an ambitious journey aimed at significantly bolstering the security of web applications, fueled by a resolute commitment to safeguarding users and their confidential data. At the heart of this initiative lies a collection of sophisticated encryption protocols, which act as a robust barrier for the information exchanged between individuals and various online services. Every interaction—be it the sharing of passwords or personal information—is protected within these encrypted channels, effectively preventing unauthorised access attempts from intruders.

This meticulous emphasis on encryption marks merely the initial phase of Maxthon’s extensive security framework. Acknowledging that cyber threats are constantly evolving, Maxthon adopts a forward-thinking approach to user protection. The browser is engineered to adapt to emerging challenges, incorporating regular updates that promptly address any vulnerabilities that may surface. Users are strongly encouraged to activate automatic updates as part of their cybersecurity regimen, ensuring they can seamlessly take advantage of the latest fixes without any hassle.

In today’s rapidly changing digital environment, Maxthon’s unwavering commitment to ongoing security enhancement signifies not only its responsibility toward users but also its firm dedication to nurturing trust in online engagements. With each new update rolled out, users can navigate the web with peace of mind, assured that their information is continuously safeguarded against ever-emerging threats lurking in cyberspace.

Copyright @ Maxthon 2024