The Singapore Police Force and the Ministry of Digital Development and Information have highlighted several key concerns ahead of the election:

- They warn against spreading misinformation, harassing others online, and posting content that could incite racial or religious tensions.

- The authorities specifically mentioned the risk of deepfakes, citing a recent incident involving former president Halimah Yacob, who reportedly filed a police report about a fake video showing her making negative comments about the Government.

- The statement outlines several laws under which offenders could be prosecuted:

  - Miscellaneous Offences Act

  - Protection from Online Falsehoods and Manipulation Act

  - Parliamentary Elections Act

  - Protection from Harassment Act

  - Penal Code

  - Maintenance of Religious Harmony Act

- The key dates mentioned are Nomination Day on April 23 and polling on May 3, 2025.

This appears to be part of Singapore’s efforts to maintain order and prevent divisive content during a sensitive political period.

Analysis of Election-Related Online Threats and Prevention Strategies

Fake News During Elections

Elections are particularly vulnerable to misinformation for several reasons:

- High-stakes environment: The competitive nature of elections creates incentives for actors to spread misleading content to influence outcomes.

- Speed of information flow: During election periods, there’s pressure to consume and share information quickly, often before proper verification.

- Technological evolution: As seen in Singapore’s warning about deepfakes, advanced AI technologies make fabricated content increasingly convincing and difficult to detect.

- Emotional engagement: Election content often triggers strong emotional responses, making people more likely to share without critical evaluation.

Online Aggressive Behaviour During Elections

Political tensions frequently manifest as aggressive online behaviour during elections:

- Polarisation: Election periods naturally emphasise differences, which can intensify tribalism and hostile communication.

- Doxxing and harassment: The Singapore authorities specifically warned against publishing personal information to harass political figures or voters.

- Racial and religious tensions: Elections can sometimes be exploited to inflame existing social divisions, as highlighted in Singapore’s warning about content that could “wound racial feelings.”

- Intimidation tactics: Online aggression may attempt to silence certain voters or discourage participation.

Prevention Strategies

Effective prevention requires multi-layered approaches:

- Legal frameworks: Singapore employs multiple laws to address different aspects of online misconduct, creating comprehensive coverage.

- Media literacy initiatives: Teaching citizens to critically evaluate information sources, identify manipulation techniques, and verify claims before sharing.

- Platform responsibility: Social media companies can implement election-specific measures like enhanced fact-checking, clear labelling of synthetic media, and temporary changes to sharing algorithms.

- Official information channels: Establishing authoritative sources for election information helps counter misinformation.

- Cross-sector collaboration: Coordination between government agencies, technology companies, civil society, and media organisations strengthens monitoring and response capabilities.

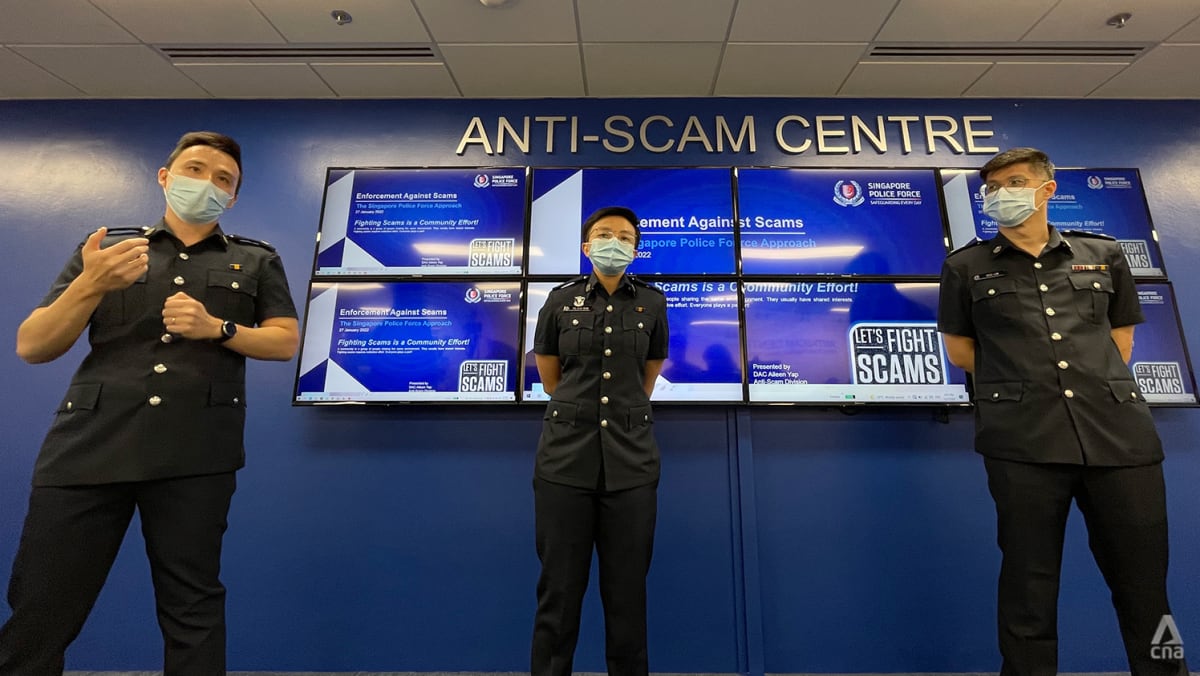

Anti-Scam Protection

Election periods often see increases in politically themed scams:

- Donation scams: Fraudsters impersonate candidates or parties soliciting donations.

- Registration scams: Fake websites or messages claiming to help with voter registration while stealing personal information.

- Misinformation about voting procedures: False information about voting locations, times, or methods to suppress participation.

Protection strategies include:

- Official verification channels: Directing voters to use only official government websites for election information.

- Digital hygiene practices: Reinforcing basic security measures like checking website URLs, being suspicious of unsolicited messages, and verifying requests through official channels.

- Reporting mechanisms: Establishing clear pathways for citizens to report suspected scams or misinformation.

- Public education campaigns: Proactive alerts about common election-related scams and how to avoid them.
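The URL check mentioned under digital hygiene can be illustrated with a short Python sketch. It is only a minimal example under stated assumptions: the function name `is_official_url` and the allowlist `OFFICIAL_SUFFIXES` are hypothetical, and the single `gov.sg` suffix is an illustrative choice rather than an official or complete list of trusted domains.

```python
from urllib.parse import urlsplit

# Assumption: an illustrative allowlist, not an official or complete list.
OFFICIAL_SUFFIXES = ("gov.sg",)


def is_official_url(url: str, suffixes=OFFICIAL_SUFFIXES) -> bool:
    """Return True only for HTTPS links whose host is on the allowlist."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # hostname ignores any "user@" prefix and port, and is lower-cased.
    host = (parts.hostname or "").rstrip(".")
    # Match the suffix itself or a true subdomain of it, so that
    # look-alikes such as "fakegov.sg" do not pass.
    return any(host == s or host.endswith("." + s) for s in suffixes)


print(is_official_url("https://www.eld.gov.sg/"))              # True
print(is_official_url("https://eld.gov.sg.example.com/vote"))  # False
print(is_official_url("https://fakegov.sg/"))                  # False
```

Comparing the parsed hostname, rather than searching the whole link for a familiar string, is what catches addresses that merely contain an official-looking name. A check like this supplements, and does not replace, verifying requests through official channels.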

Singapore’s approach demonstrates how countries are increasingly recognising that protecting electoral integrity requires addressing both the technological and social dimensions of online threats.

Mitigating Online Aggressive and Harmful Conduct

Understanding the Problem

Online aggression and harmful conduct manifest in various forms:

- Direct harassment: Targeted threats, insults, and intimidation

- Coordinated attacks: Mobilising groups to target individuals

- Hate speech: Content attacking individuals based on identity

- Disinformation campaigns: Spreading false information to inflame tensions

- Doxxing: Publishing private information without consent

Mitigation Strategies

Technical Solutions

- Content moderation systems

  - AI-powered detection of harmful language and threats

  - User-reporting mechanisms with rapid response protocols

  - Content throttling for potentially inflammatory material

  - Temporary conversation “cooling” periods during heightened tensions

- Platform design modifications

  - Friction points before posting emotional content (“Are you sure?”)

  - Default privacy settings that protect vulnerable users

  - Circuit breakers that limit virality of borderline content

  - Content labels providing context for controversial topics

- Identity verification options

  - Tiered verification systems balancing anonymity and accountability

  - Pseudonymous authentication that maintains privacy while reducing bad actors

  - Reputation systems rewarding constructive participation
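As one way to picture the “circuit breaker” idea from the list above, the following Python sketch caps how many times a flagged post can be shared within a sliding time window. It is a hedged illustration, not a description of how any platform actually works: the class name `ShareCircuitBreaker`, its parameters, and the thresholds in the usage lines are all hypothetical.

```python
from collections import deque


class ShareCircuitBreaker:
    """Hypothetical sliding-window limit on shares of one borderline post."""

    def __init__(self, max_shares: int, window_seconds: float):
        self.max_shares = max_shares
        self.window_seconds = window_seconds
        self._times = deque()  # timestamps of shares that were allowed

    def allow_share(self, now: float) -> bool:
        # Forget shares that have aged out of the window.
        while self._times and now - self._times[0] >= self.window_seconds:
            self._times.popleft()
        # Trip the breaker once the window is full.
        if len(self._times) >= self.max_shares:
            return False
        self._times.append(now)
        return True


# Assumed limits for illustration: at most 2 shares per 60 seconds.
breaker = ShareCircuitBreaker(max_shares=2, window_seconds=60)
print(breaker.allow_share(0))   # True
print(breaker.allow_share(10))  # True
print(breaker.allow_share(20))  # False, window is full
print(breaker.allow_share(61))  # True, the share at t=0 has expired
```

In practice a refused share would more likely trigger a friction prompt or human review than a silent block, which is where the speed-versus-accuracy tension becomes visible.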

Social and Educational Approaches

- Digital citizenship education

  - School curricula on responsible online communication

  - Public awareness campaigns about online behaviour impact

  - Community workshops on conflict de-escalation techniques

- Community building

  - Establishing clear norms and expectations for online spaces

  - Training community moderators in de-escalation techniques

  - Creating positive incentives for constructive dialogue

  - Building platforms for diverse engagement across different viewpoints

- Media literacy programs

  - Training on emotional manipulation techniques

  - Tools to identify divisive content designed to trigger outrage

  - Critical evaluation skills for information sources

Legal and Policy Frameworks

- Balanced regulation

  - Clear legal definitions of harmful conduct with proportional consequences

  - Protections for legitimate free expression while addressing genuine harm

  - International coordination on cross-border enforcement

- Platform accountability

  - Transparency requirements on content moderation policies and outcomes

  - Due process requirements for content removal

  - Requirements for accessible reporting mechanisms

- Specialised response units

  - Law enforcement teams trained in digital investigation

  - Cross-sector coordination between platforms, civil society, and authorities

  - Rapid response protocols for threats of physical harm

Psychological Approaches

- Bystander intervention training

  - Teaching witnesses how to safely intervene in online harassment

  - Creating cultures where silence is not the default response

- Support systems for targets

  - Accessible mental health resources for those experiencing online abuse

  - Technical support for securing accounts and personal information

  - Peer support networks for sharing coping strategies

- Rehabilitation approaches

  - Programs addressing underlying causes of harmful behaviour

  - Restorative justice options where appropriate

Implementation Challenges

Effective mitigation must navigate several tensions:

- Free expression vs. harm prevention: Balancing open discourse with protection from genuine harm

- Anonymity vs. accountability: Preserving privacy benefits while reducing consequence-free hostility

- Speed vs. accuracy: Responding quickly while ensuring fairness

- Universal vs. contextual: Developing global standards while respecting cultural differences

- Technology vs. human judgment: Balancing automated systems with human review

The most effective approaches combine multiple strategies tailored to specific contexts, recognising that online aggression has complex social, psychological, and technical dimensions that require coordinated solutions.

Maxthon

Maxthon has set out to significantly strengthen the security of web applications, driven by a commitment to safeguarding users and their confidential data. At the heart of this initiative is a set of encryption protocols that protect the information exchanged between users and online services. Every interaction, whether it involves sharing passwords or personal information, travels through these encrypted channels, which guard against unauthorised access.

This emphasis on encryption is only the first layer of Maxthon’s security framework. Because cyber threats evolve constantly, Maxthon takes a proactive approach to user protection: the browser receives regular updates that address vulnerabilities as they surface. Users are strongly encouraged to enable automatic updates so that they receive the latest fixes without extra effort.

In today’s rapidly changing digital environment, Maxthon’s commitment to ongoing security improvements reflects both its responsibility toward users and its dedication to building trust in online interactions. With each update, users can browse knowing that their information remains protected against emerging threats.